Nick Merich

Growth operator with exits. Zero-to-one to scale.

15+ years building and scaling startups in music tech, web3, and AI. Exited founder (Songlink → Linktree). Head of Growth at VC-backed startups. Founder Mentor at Carnegie Mellon & Run Club Leader.

OUTCOMES

TESTIMONIALS

Kurt Weiberth

Linktree Staff Engineer / ex. PayPal, Songlink (acquisition) / Former Business Partner

Nick single-handedly turned Songlink from a side project into a business. Prior to partnering with him the platform was a useful tool for friends and family with slow organic growth. Nick immediately identified the core user segment that would drive revenue and created a growth flywheel. He then coupled relentless determination with natural charisma to grow a network of recording artists, managers and labels who started using Songlink as part of their release strategies. This community drove our user and revenue growth, which led to profitability and an eventual Linktree acquisition. Oh, and most importantly he's a genuinely good person. Don't hesitate to work with Nick.

Patrick Sweetman

Top 1% Music Tech Engineer // Founder @ Recoup AI, AI Agent x Onchain Engineering Leader / ex Amazon

Nick is a standout leader in the onchain music industry. His unique blend of experience and innovation allows him to successfully launch new products, from building effective growth strategies to nurturing artist relationships. As he spearheads his new growth company, his profound expertise will undoubtedly build some of the most diverse projects. I'm confident that entrusting your product to Nick and his team means embarking on a path of substantial growth and success.

Garrett Hughes

Co-founder @ Mint Songs (acquired Napster) / Engineering Leader @ Dune Analytics

Nick's leadership in our team was inspiring and encouraged us to achieve our best potential. He was responsible for community development, where he demonstrated an innate ability to build, nurture and grow communities that still thrive today. I had the pleasure of working together at Mint Songs as a colleague for a year. During that time, he served as the Director of Growth and displayed an exceptional comprehension and execution in Growth strategies, community development, user growth, as well as brand strategy that significantly contributed to our projects' success. Nick's dedication, ingeniously paired with his business acumen and creative marketing skills, brought a considerable boost in user growth for our brand. Nick is a powerhouse of Growth and Marketing strategies, an expert in building partnerships, and a maestro in developing communities.

Eric Johnson

COO at Session / ex OpenSea, Spotify, Mint Songs

Nick Merich is a master of growth marketing, partnerships, and community development. Working together at an early-stage startup, his expertise in crafting effective growth strategies and brand development was a game-changer for the business.

Nathan Pham

CEO @ Fanfly / ex United Masters, Napster, Pandora

Nick is one of the best growth marketers I've worked with. He's so equipped and well rounded to grow product and user base of any size! After a year of working together at Mint Songs, I definitely look forward to working with him again in the future.

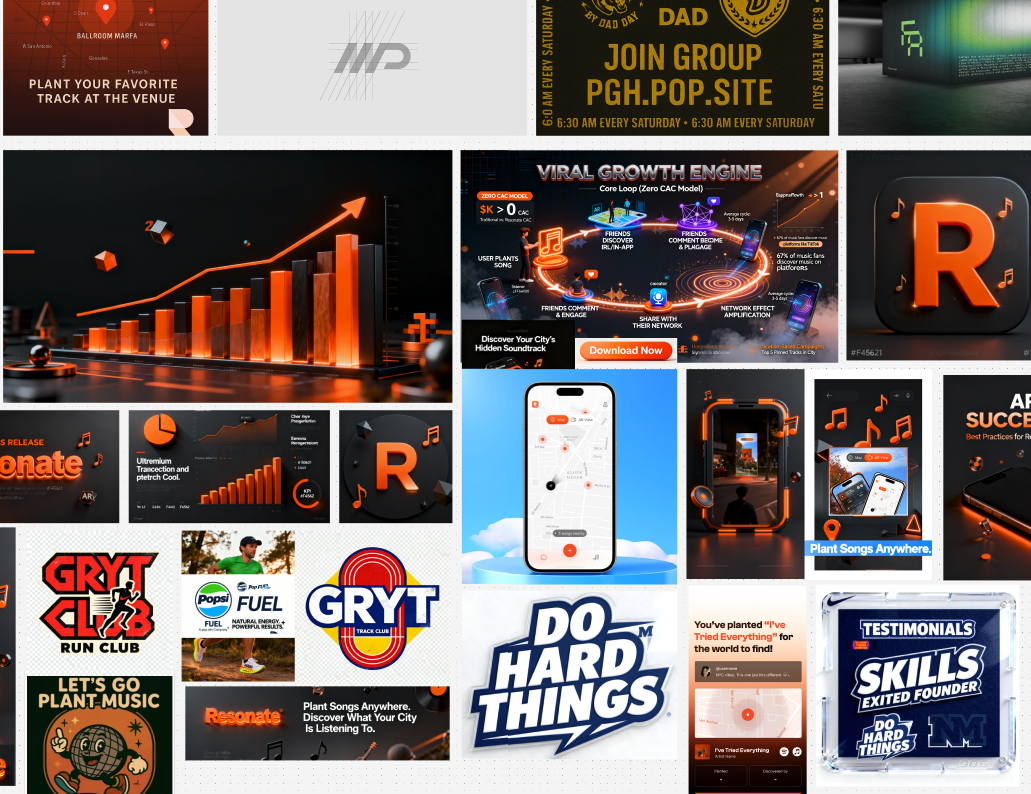

WORK

Co-Founder

Forest Ave.

Sept 2023 - Present

Co-Founder

Songlink (Acquired by Linktree)

March 2016 - 2021

Entrepreneurship Mentor (Startup Advisor)

Carnegie Mellon University, Swartz Center

2020 - Present

Director of Growth

Mint Songs (Acquired by Napster)

2022-2023

Head of Growth

Kits AI (a16z backed)

2023-2024

Co-Founder

Andocia Creative Agency (Acquired)

2014-2019

Head of Run Clubs

Dad Day

Feb 2025 - Present

Growth Advisor

Pop Site

Sept 2025 - Present

SATURDAY AT 6 AM

Dad Day Run Club

Every Saturday we meet in the South Hills of Pittsburgh, PA, to run 45 minutes total at a 9-10 pace on trails, road, or a mix of both. Join the community below.

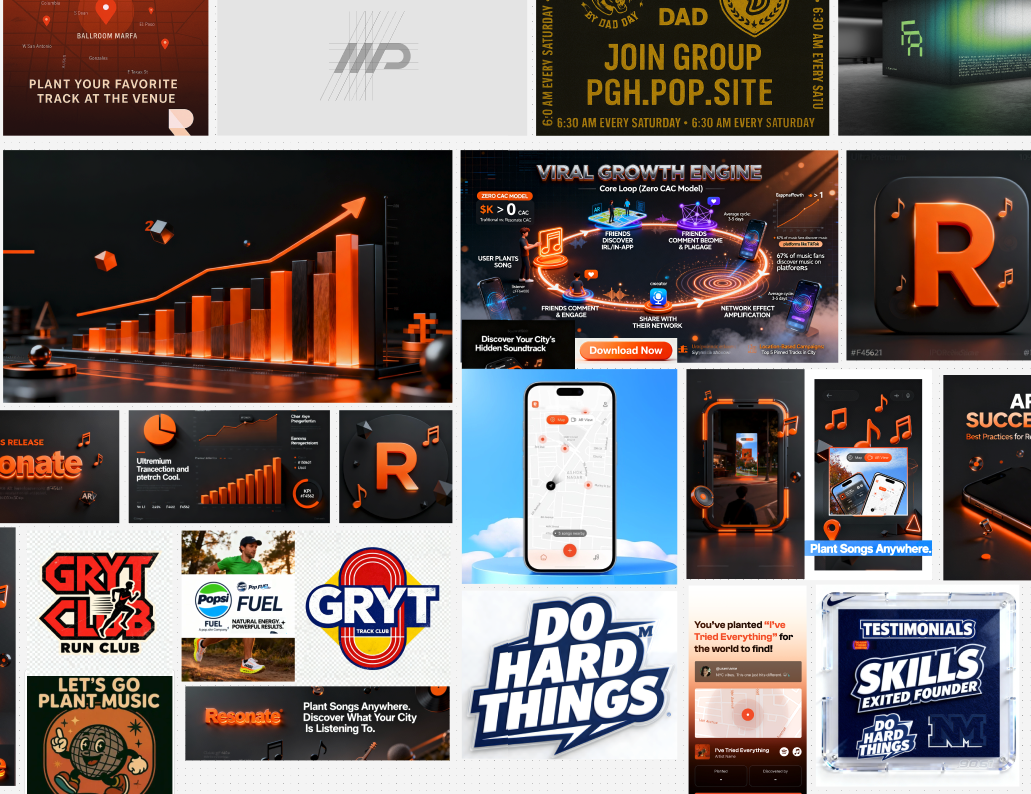

Director of Growth @ Mint Songs

Mint Songs (acquired by Napster): Built an artist onboarding engine that grew the user base 40% MoM, drove $30K in NFT sales in 4 months, scaled the community to 20K Twitter followers, 0 to 15K Discord members, and 0 to 5K YouTube subscribers, and produced 8+ Live Minting x Creation Events in LA, NYC, and Pittsburgh.

Head of Growth @ Kits

Kits AI (a16z backed): Scaled Kits.io (sample pack marketplace), signed the first 8 licensed AI voice major, and contributed to building viral organic and paid growth loops with partners leading to millions of users.

Senior Growth Manager @ Trainwell

Trainwell: Ran a $32K influencer campaign that generated above-average new subscriptions at a $32 CAC, owned content and email, created a new NIL athlete influencer vertical, and ran comedy and productivity podcast ads to find new ways to scale growth without hurting retention.

Growth Partner @ Creatives Drink

Creatives Drink is a live case of a creative-driven beverage or lifestyle brand. Produced and sold hundreds of customized cocktail boxes year-round in partnership with industry-leading city brands.

SIDE QUESTS

coming soon
